[Paper Reading] SentiBERT: A Transferable Transformer-Based Architecture for Compositional Sentiment Semantics (ACL 2020)

Task

Sentiment Analysis

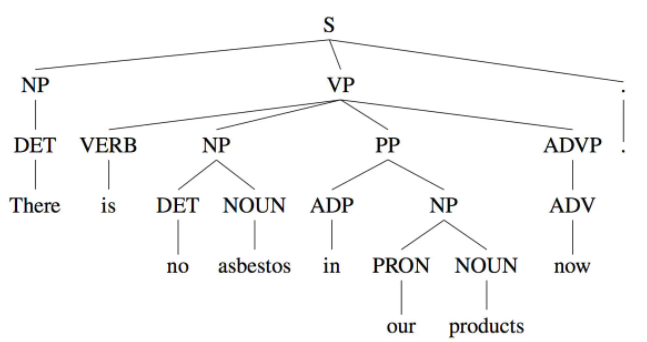

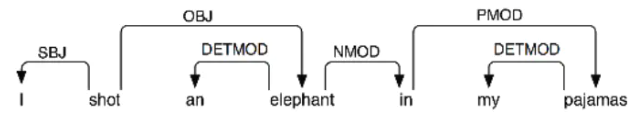

Tree structures

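- Constituency tree: built recursively from syntactic (phrase-structure) rules to represent a sentence. Only the leaf nodes correspond to words of the input sentence; every internal node is labeled with a phrase constituent (for example NP or VP).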

- Dependency tree: expresses syntax through dependency relations between words. If one word modifies another, it is said to depend on that word.
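To make the difference between the two tree types concrete, here is a minimal sketch in plain Python. The sentence, the labels, and the head indices are a hand-written toy parse (an assumption for illustration, not the output of a real parser), and the helper names `leaves` and `dependents` are hypothetical.

```python
# Constituency tree as nested tuples: (label, child, child, ...).
# A pre-terminal is (POS_tag, word); only these leaves carry words.
constituency = (
    "S",
    ("NP", ("DT", "the"), ("NN", "movie")),
    ("VP", ("VBZ", "is"), ("ADJP", ("RB", "not"), ("JJ", "bad"))),
)


def leaves(tree):
    """Collect the words at the leaves, left to right."""
    _label, *children = tree
    if len(children) == 1 and isinstance(children[0], str):
        return [children[0]]
    words = []
    for child in children:
        words.extend(leaves(child))
    return words


# Dependency tree: every word stores the index of its head (-1 marks the root).
# There are no phrase nodes; every node is a word.
words = ["the", "movie", "is", "not", "bad"]
heads = [1, 4, 4, 4, -1]  # toy annotation: "bad" is the root


def dependents(heads, head_index):
    """Indices of the words that depend directly on the word at head_index."""
    return [i for i, h in enumerate(heads) if h == head_index]


print(leaves(constituency))
print([words[i] for i in dependents(heads, 4)])
```

In the constituency tree the internal nodes (`S`, `NP`, `VP`, `ADJP`) are extra phrase nodes, while the dependency tree has exactly one node per word.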

Motivation

The sentiment of an expression is determined by the meaning of its tokens and phrases and by how they are syntactically combined.

Sentiment = semantics + syntax

Model

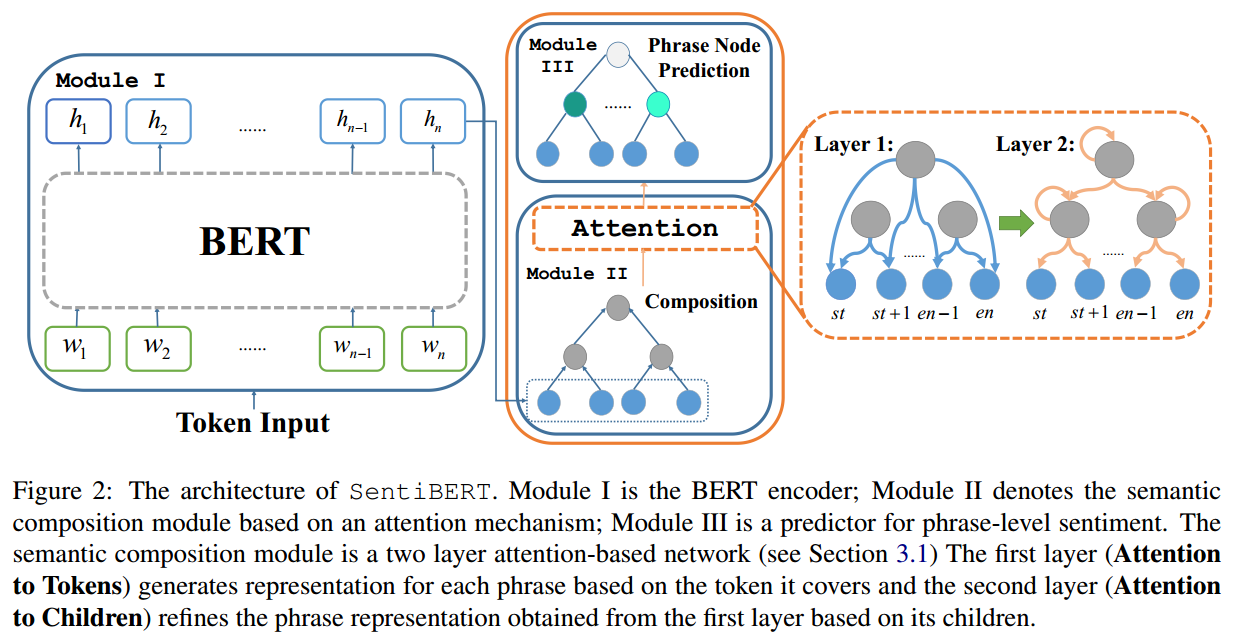

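- For each sentence, obtain a binary constituency parse tree in advance.

- Use BERT to obtain the semantic representation of each word.

- Use attention to compose these representations along the binary constituency parse tree.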
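As a rough sketch of the first step, the snippet below walks a binary constituency tree and records, for every phrase node, the range of tokens it covers. The nested-tuple encoding, the function name `phrase_spans`, and the inclusive `(start, end)` span convention are assumptions made for illustration; they are not taken from the paper's code.

```python
def phrase_spans(tree, start=0, spans=None):
    """Return (next_token_index, spans) for a binary tree.

    A leaf is a string (one token); an internal node is a pair (left, right).
    Spans are inclusive (start, end) token indices, listed children-first.
    """
    if spans is None:
        spans = []
    if isinstance(tree, str):
        return start + 1, spans
    left, right = tree
    mid, _ = phrase_spans(left, start, spans)
    end, _ = phrase_spans(right, mid, spans)
    spans.append((start, end - 1))
    return end, spans


# Toy binary parse of "the movie is not bad" (hand-written, not parser output).
tree = (("the", "movie"), ("is", ("not", "bad")))
num_tokens, spans = phrase_spans(tree)
print(num_tokens, spans)
```

Because children are emitted before their parent, a bottom-up composition step can process the spans in list order and always find the children's representations already computed.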

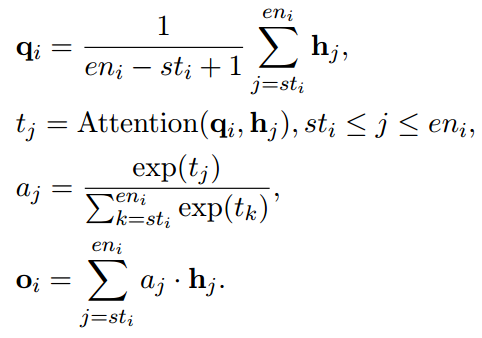

- Attention to Tokens

Concatenate $o_i$ and $q_i$ and feed the result into a feedforward network.

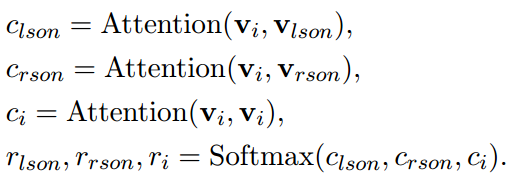

- Attention to Children

- Attention computation:

$$\mathrm{Attention}(a,b) = \tanh\left(\frac{1}{\alpha}\,\mathrm{SeLU}\left((W_1 \times a)^{T} \times W_3 \times \mathrm{SeLU}(W_2 \times b)\right)\right)$$

- Loss: Masked Language Modeling + Phrase Node Prediction
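The attention score formula above can be sketched in plain Python as follows. This follows the formula exactly as written in these notes; the matrix shapes, the value passed for `alpha`, and the helper names `selu`, `matvec`, and `attention_score` are assumptions for illustration, not the authors' implementation.

```python
import math

# Standard SELU constants (Klambauer et al., 2017).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(x):
    """Scaled exponential linear unit for a single float."""
    return SELU_SCALE * (x if x > 0 else SELU_ALPHA * (math.exp(x) - 1.0))


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def attention_score(a, b, W1, W2, W3, alpha):
    """tanh( (1/alpha) * SeLU( (W1 a)^T W3 SeLU(W2 b) ) ), a scalar in (-1, 1)."""
    left = matvec(W1, a)                          # W1 a
    right = [selu(x) for x in matvec(W2, b)]      # SeLU(W2 b)
    bilinear = sum(l * r for l, r in zip(left, matvec(W3, right)))
    return math.tanh(selu(bilinear) / alpha)


# Toy usage: 2-dimensional vectors and identity matrices (assumed values).
identity = [[1.0, 0.0], [0.0, 1.0]]
score = attention_score([1.0, 0.0], [1.0, 0.0], identity, identity, identity, alpha=1.0)
print(score)
```

The outer `tanh` keeps every score strictly between -1 and 1 regardless of how large the bilinear term becomes.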

Experiments

- Effectiveness of SentiBERT

- Demonstrates the effectiveness of BERT

- Demonstrates the effectiveness of the attention structure

- Transferability of SentiBERT

- Effectiveness and interpretability of the SentiBERT architecture in terms of semantic compositionality

- Semantic Compositionality

- Negation and Contrastive Relation

- Case Study: attention distribution among hierarchical structures

- Amount of Phrase-level Supervision

Supplementary material: Tree-LSTM

Improved Semantic Representations From Tree-Structured Long Short-Term Memory Networks (2015)

- Standard LSTM

- Child-Sum Tree-LSTMs(Dependency Tree-LSTMs)

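Unlike a standard LSTM unit, a Child-Sum Tree-LSTM unit first sums the hidden states of all child nodes and then computes the gated representation from that sum. This suits trees in which nodes have many children or the children are unordered.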

- N-ary Tree LSTM(Constituency Tree-LSTMs)

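N-ary Tree-LSTMs differ from Child-Sum Tree-LSTMs in that each child's hidden state is transformed separately and the results are then summed. Because a node in a constituency tree has at most N children and the children are ordered, a separate parameter matrix U can be learned for each child position, which yields a finer-grained representation.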
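For reference, the contrast between the two variants can be written out as below, following the notation of the Tree-LSTM paper. The symbols are not defined elsewhere in these notes, so they are introduced here: $x_j$ is the input at node $j$, $C(j)$ is its set of children, $h_k$ and $c_k$ are the hidden and cell states of child $k$, $\sigma$ is the logistic sigmoid, and $\odot$ is element-wise multiplication. Only the input gate, forget gate, and state updates are shown; the output gate $o_j$ and candidate $u_j$ follow the same pattern as $i_j$ (with $\tanh$ in place of $\sigma$ for $u_j$).

Child-Sum Tree-LSTM (one shared $U$ applied to the summed child states):

$$
\begin{aligned}
\tilde{h}_j &= \sum_{k \in C(j)} h_k \\
i_j &= \sigma\left(W^{(i)} x_j + U^{(i)} \tilde{h}_j + b^{(i)}\right) \\
f_{jk} &= \sigma\left(W^{(f)} x_j + U^{(f)} h_k + b^{(f)}\right) \\
c_j &= i_j \odot u_j + \sum_{k \in C(j)} f_{jk} \odot c_k \\
h_j &= o_j \odot \tanh(c_j)
\end{aligned}
$$

N-ary Tree-LSTM (a separate $U_\ell$ per child position $\ell = 1, \dots, N$):

$$
\begin{aligned}
i_j &= \sigma\left(W^{(i)} x_j + \sum_{\ell=1}^{N} U^{(i)}_{\ell} h_{j\ell} + b^{(i)}\right) \\
f_{jk} &= \sigma\left(W^{(f)} x_j + \sum_{\ell=1}^{N} U^{(f)}_{k\ell} h_{j\ell} + b^{(f)}\right) \\
c_j &= i_j \odot u_j + \sum_{\ell=1}^{N} f_{j\ell} \odot c_{j\ell} \\
h_j &= o_j \odot \tanh(c_j)
\end{aligned}
$$

The position-specific matrices $U_\ell$ are what the fixed, ordered child slots of a constituency tree make possible; the Child-Sum variant cannot distinguish child positions because the children are summed before $U$ is applied.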

by Mikito
